LLMs to PLMs through MCPs (Model Context Protocol): A New Era of Product Development with AI Agents

In this article, let’s look at the new era of Product Development with AI Agents.

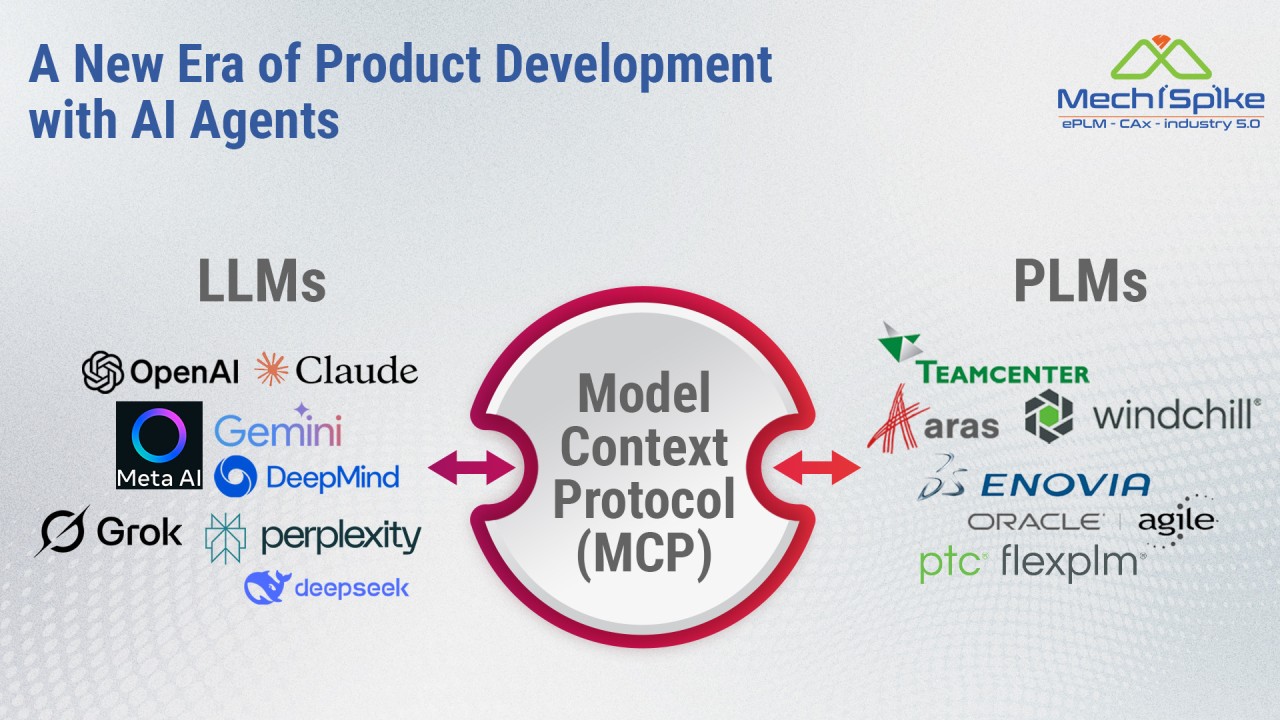

A New Era of Product Development with AI Agents

AI has arrived in the form of LLMs, and the tsunami of AI Agents is now getting ready to fundamentally transform everything we do, including Product Development, the main focus of this newsletter.

Product Lifecycle Management (PLM) systems serve as the digital backbone for managing the lifecycle of a product from concept to disposal.

However, as product data becomes more complex and distributed, and as AI Agents become more integral to workflows, there is a growing need for a standardized way to connect LLMs (which power these AI Agents) to the systems where the data actually lives, such as PLM.

This is where the Model Context Protocol (MCP) comes in: an emerging open standard that could revolutionize how AI interacts with any system of your choice.

What Is MCP (Model Context Protocol)?

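The Model Context Protocol (MCP) is an open-source standard, introduced by Anthropic in November 2024. It lets large language models (LLMs) and autonomous AI agents access external data and tools in real time, including enterprise systems such as PLM, ERP, MES, and others. An AI application connects, through an MCP client, to one or more MCP servers. Each server wraps one system and exposes what that system can do as tools (operations), resources (data), and prompts (reusable templates), using JSON-RPC 2.0 messages. The result is a structured bridge between AI assistants and enterprise systems, and that bridge can be made permission-aware.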

In simple terms, MCP enables LLMs to ask the right questions to the right systems — and get precise, real-time, and actionable answers — by understanding the context of the task, the role of the user, and the underlying data model of the connected systems.

MCP defines:

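- How AI assistants discover what a connected system offers (each tool is listed with a name, a description, and a JSON Schema for its inputs)

- How they request specific data or operations (reading a resource or calling a tool)

- How context is supplied to the model (resources such as documents or records, and reusable prompt templates)

- How access is secured (the specification includes an OAuth-based authorization framework for HTTP-based transports and calls for explicit user consent before data is shared or tools are invoked)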

Since its release, MCP has gained support from other major AI providers and developer-tool vendors. It is seen as a critical enabler of AI adoption in data-sensitive industries like manufacturing.
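The discovery and request steps in the list above travel as JSON-RPC 2.0 messages. The sketch below builds three messages:

- a `tools/list` request,
- one possible reply from a PLM MCP server,
- a `tools/call` request for a tool named in that reply.

It skips the `initialize` handshake that opens a real session. The tool `get_bom` and its `part_number` argument are hypothetical names invented for illustration, not part of any actual PLM product.

```python
import json

def jsonrpc_request(req_id, method, params=None):
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg

# Step 1, discovery: ask the server which tools it offers.
list_request = jsonrpc_request(1, "tools/list")

# One possible reply from a hypothetical PLM MCP server. Each tool carries a
# name, a natural-language description, and a JSON Schema for its inputs.
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [{
            "name": "get_bom",  # hypothetical tool
            "description": "Return the released BOM for a part number.",
            "inputSchema": {
                "type": "object",
                "properties": {"part_number": {"type": "string"}},
                "required": ["part_number"],
            },
        }]
    },
}

def build_tool_call(req_id, tool, arguments):
    """Step 2, request: call a discovered tool after checking required inputs.
    Only the 'required' list is checked here, not full JSON Schema validation."""
    required = tool["inputSchema"].get("required", [])
    missing = [k for k in required if k not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return jsonrpc_request(req_id, "tools/call",
                           {"name": tool["name"], "arguments": arguments})

tool = list_response["result"]["tools"][0]
call_request = build_tool_call(2, tool, {"part_number": "PN-1001"})
print(json.dumps(call_request, indent=2))
```

In a live session, these dictionaries would be serialized and sent over one of MCP's standard transports: stdio for local servers or HTTP for remote ones.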

How MCP Helps PLM Systems

PLM systems are rich in structured and unstructured data. They host everything from CAD models and Bills of Materials (BOMs) to engineering change orders and compliance documentation.

However, accessing and making sense of this data—especially across silos—has traditionally been cumbersome. LLMs, when connected via MCP, can change that.

Here’s how MCP benefits PLM systems:

- Contextual Intelligence: MCP enables AI agents to understand the PLM system’s structure, terminology, and user permissions.

- Real-time Interaction: Rather than syncing or duplicating data to another system, MCP allows LLMs to work in situ, querying and interpreting live data directly from PLM sources, ensuring accuracy and up-to-date insights.

- Composable Workflows: MCP allows AI agents to participate in multi-system workflows, orchestrating tasks that touch PLM, ERP, and supply chain systems in sequence, such as running a design impact analysis that automatically triggers a supplier availability check.

- Accelerated Decision-Making: By enabling AI agents to summarize change impacts, assess compliance risks, or compare part costs directly within the PLM context, MCP empowers faster and more informed decision-making.
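The composable-workflow idea can be sketched as an agent that routes calls to tools on two different servers and feeds the output of one into the next. Below, the PLM and ERP tools are plain Python functions standing in for `tools/call` requests. The tool names, part numbers, and stock figures are all hypothetical sample data.

```python
# Hypothetical stand-ins for tools on two MCP servers. In a real deployment,
# each function would be replaced by a "tools/call" request to that server.
def plm_design_impact(changed_part):
    """PLM server: parts affected by a change to `changed_part` (sample data)."""
    impacts = {"BRKT-200": ["BOLT-M6", "PLATE-7"]}
    return impacts.get(changed_part, [])

def erp_availability(part):
    """ERP server: units currently on hand for `part` (sample data)."""
    stock = {"BOLT-M6": 1200, "PLATE-7": 0}
    return stock.get(part, 0)

# The agent host's routing table: tool name -> server-side handler.
TOOLS = {
    "plm.design_impact": plm_design_impact,
    "erp.availability": erp_availability,
}

def call_tool(name, *args):
    return TOOLS[name](*args)

def impact_then_availability(changed_part, needed_qty):
    """Run the PLM impact analysis, then check supply for every affected part."""
    report = []
    for part in call_tool("plm.design_impact", changed_part):
        on_hand = call_tool("erp.availability", part)
        report.append({"part": part, "on_hand": on_hand,
                       "shortage": max(0, needed_qty - on_hand)})
    return report

print(impact_then_availability("BRKT-200", 500))
```

Keeping the routing table in the host is what makes the workflow composable: adding a third system means registering another server's tools, not rewriting the sequence logic.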

Use Cases of MCP in PLM Context

Let’s explore some practical scenarios where MCP-connected AI assistants can add value in PLM environments:

1. Autonomous Design Reviews

An AI assistant can review a 3D CAD model within the PLM system, check for design rule violations, and even compare revisions. Using MCP, it can fetch component specs, flag deprecated parts, and summarize risks for the design lead. Throughout, it respects access controls and takes the design intent into account.
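The simplest slice of the design review described above is a revision comparison with a deprecated-part check, sketched below. It assumes the agent has already fetched each revision's component list and the parts' lifecycle states from the PLM system, for example through MCP tool calls. The lifecycle state names `Deprecated` and `Obsolete` are assumptions, since every PLM configures its own.

```python
def review_revision(old_parts, new_parts, lifecycle):
    """Compare two revisions' component lists and flag parts that should not be used."""
    old_set, new_set = set(old_parts), set(new_parts)
    blocked_states = {"Deprecated", "Obsolete"}  # assumed lifecycle state names
    return {
        "added": sorted(new_set - old_set),
        "removed": sorted(old_set - new_set),
        "deprecated_in_use": sorted(p for p in new_set
                                    if lifecycle.get(p) in blocked_states),
    }

findings = review_revision(
    old_parts=["A-100", "B-200", "C-300"],
    new_parts=["B-200", "C-300", "D-400", "E-500"],
    lifecycle={"C-300": "Obsolete", "D-400": "Released", "E-500": "Deprecated"},
)
print(findings)
```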

2. BOM Cost Analysis

Through MCP, a cost analysis AI agent can pull BOM data, connect it with supplier pricing (from ERP or procurement systems), and generate a cost breakdown. If certain components are above target price thresholds, it can recommend alternatives from approved vendor lists.
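The cost breakdown and threshold check can be sketched as follows. The sketch assumes the agent has already pulled BOM lines from PLM and unit prices from ERP. The `BomLine` fields, the per-part target price, and the shape of the approved-alternatives table are hypothetical. Prices are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass

@dataclass
class BomLine:
    part: str
    qty: int
    unit_cents: int     # current unit price from ERP/procurement (assumed)
    target_cents: int   # target unit price for this part (assumed)

def cost_breakdown(bom, approved_alternatives):
    """Compute extended cost per line and total cost, and suggest the cheapest
    approved alternative for any line whose unit price exceeds its target."""
    lines, total = [], 0
    for line in bom:
        extended = line.qty * line.unit_cents
        total += extended
        entry = {"part": line.part, "extended_cents": extended}
        if line.unit_cents > line.target_cents:
            # Keep only approved alternatives priced at or below target.
            candidates = [(alt, price) for alt, price
                          in approved_alternatives.get(line.part, [])
                          if price <= line.target_cents]
            entry["alternative"] = (min(candidates, key=lambda c: c[1])[0]
                                    if candidates else None)
        lines.append(entry)
    return {"lines": lines, "total_cents": total}

bom = [BomLine("RES-10K", 4, 250, 300), BomLine("MTR-9", 2, 1200, 1000)]
alts = {"MTR-9": [("MTR-9B", 950), ("MTR-9C", 1100)]}
print(cost_breakdown(bom, alts))
```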

3. Procurement Task Assistance

MCP allows AI assistants to track part numbers across systems. For a product engineer requesting a part, the AI assistant can verify if the part is approved for use, check supplier lead times, and initiate a request for quote (RFQ) — all within a single conversation interface.
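The approval check, lead-time lookup, and RFQ draft can be chained as in the sketch below. The two dictionaries stand in for answers from a PLM server (part status) and an ERP server (supplier lead times in days). All part numbers, supplier names, and status values are hypothetical. Picking the shortest lead time is just one possible sourcing rule.

```python
from datetime import date, timedelta

# Hypothetical data that MCP tool calls to PLM and ERP might return.
PART_STATUS = {"RES-10K": "Approved", "CAP-22U": "Restricted"}
LEAD_TIME_DAYS = {"RES-10K": {"SupplierA": 14, "SupplierB": 7}}

def request_part(part, qty, today):
    """Verify approval, pick the fastest supplier, and draft an RFQ."""
    status = PART_STATUS.get(part)
    if status is None:
        return {"part": part, "ok": False, "reason": "part not found in PLM"}
    if status != "Approved":
        return {"part": part, "ok": False, "reason": f"part status is {status}"}
    quotes = LEAD_TIME_DAYS.get(part, {})
    if not quotes:
        return {"part": part, "ok": False, "reason": "no supplier on record"}
    supplier, days = min(quotes.items(), key=lambda kv: kv[1])
    rfq = {"supplier": supplier, "qty": qty,
           "earliest_delivery": today + timedelta(days=days)}
    return {"part": part, "ok": True, "rfq": rfq}

print(request_part("RES-10K", 500, date(2025, 1, 1)))
print(request_part("CAP-22U", 100, date(2025, 1, 1)))
```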

4. Change Impact Assessment

When a change order is initiated, MCP enables AI to assess the downstream impact: affected parts, linked drawings, suppliers, certifications, and more. The AI agent can then generate a change summary and suggest stakeholders to notify based on historical impact patterns.
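At its core, the downstream-impact step is a traversal of the PLM where-used graph. The sketch below walks that graph breadth-first from the changed part, collecting every assembly above it plus their linked drawings. The relationship tables and identifiers are hypothetical sample data that a PLM MCP server might return. Suppliers or certifications could be gathered the same way.

```python
from collections import deque

# Hypothetical PLM relationships: which parents use each part ("where-used").
WHERE_USED = {"SCREW-1": ["BRKT-2"], "BRKT-2": ["ASSY-3", "ASSY-4"], "ASSY-3": ["PROD-X"]}
DRAWINGS = {"BRKT-2": ["DWG-2"], "ASSY-3": ["DWG-3"]}

def change_impact(changed_part):
    """Breadth-first walk up the where-used graph from the changed part."""
    affected, seen = [], {changed_part}
    queue = deque([changed_part])
    while queue:
        part = queue.popleft()
        for parent in WHERE_USED.get(part, []):
            if parent not in seen:  # guards against shared sub-assemblies and cycles
                seen.add(parent)
                affected.append(parent)
                queue.append(parent)
    drawings = sorted(d for p in [changed_part, *affected] for d in DRAWINGS.get(p, []))
    return {"affected_parts": affected, "drawings": drawings}

print(change_impact("SCREW-1"))
```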

5. Compliance and Certification Checks

AI agents can use MCP to fetch relevant documents, check part materials against restricted substance lists (RoHS, REACH), and verify that all necessary documentation is linked before release to production.
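A restricted-substance and release-readiness check could be sketched as below. The limits are the RoHS maximum concentration values for four of the restricted substances, in ppm by weight in a homogeneous material:

- lead, mercury, and hexavalent chromium: 0.1% (1000 ppm),
- cadmium: 0.01% (100 ppm).

For simplicity, each part record is treated as a single homogeneous material. The record format and the required-document list are hypothetical, and REACH SVHC screening would need its own substance list.

```python
# Subset of RoHS maximum concentration values, in ppm by weight in a
# homogeneous material. This is not the full restricted-substance list.
ROHS_LIMITS_PPM = {"lead": 1000, "mercury": 1000,
                   "hexavalent_chromium": 1000, "cadmium": 100}

# Hypothetical documents a company might require before release.
REQUIRED_DOCS = {"material_declaration", "test_report"}

def release_check(part):
    """Flag substances over their limit and required documents that are not linked.
    The part is treated as one homogeneous material for simplicity."""
    violations = sorted(s for s, ppm in part["substances_ppm"].items()
                        if s in ROHS_LIMITS_PPM and ppm > ROHS_LIMITS_PPM[s])
    missing_docs = sorted(REQUIRED_DOCS - set(part["linked_docs"]))
    return {"violations": violations, "missing_docs": missing_docs,
            "ready_for_release": not violations and not missing_docs}

housing = {"substances_ppm": {"lead": 2000, "cadmium": 50},
           "linked_docs": ["test_report"]}
print(release_check(housing))
```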

6. Product Configuration Guidance

For companies that sell complex, configurable products, often through CPQ (Configure, Price, Quote) tools, an AI assistant can guide sales or engineering through valid configurations. It can validate them against rules stored in the PLM system and generate a custom BOM.
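Validating a configuration against rules and then generating its BOM might look like the sketch below. It assumes the PLM system stores two simple kinds of rule: "option A requires option B" and "options A and B cannot be combined". Real configuration models are usually richer, and every option name and part number here is hypothetical.

```python
# Hypothetical configuration rules and option-to-part mapping held in PLM.
REQUIRES = {"heated_seats": {"premium_trim"}}
EXCLUDES = {("sport_suspension", "tow_package")}
OPTION_PARTS = {
    "base": ["CHASSIS-1"],
    "premium_trim": ["TRIM-P"],
    "heated_seats": ["SEAT-H"],
    "sport_suspension": ["SUSP-S"],
    "tow_package": ["HITCH-T"],
}

def validate(options):
    """Return rule violations for a set of selected options (empty if valid)."""
    errors = []
    for opt in sorted(options):
        for needed in sorted(REQUIRES.get(opt, set()) - options):
            errors.append(f"{opt} requires {needed}")
    for a, b in sorted(EXCLUDES):
        if a in options and b in options:
            errors.append(f"{a} cannot be combined with {b}")
    return errors

def build_bom(options):
    """Generate a custom BOM for a valid configuration; base parts are always included."""
    errors = validate(options)
    if errors:
        raise ValueError("; ".join(errors))
    return sorted(p for opt in {"base", *options} for p in OPTION_PARTS.get(opt, []))

print(validate({"heated_seats"}))
print(build_bom({"premium_trim", "heated_seats"}))
```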

Pros and Cons of Using MCP to Connect LLMs to PLM Systems

✅ Pros

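- Open and Interoperable: As an open standard, MCP encourages ecosystem collaboration and avoids vendor lock-in.

- Improved Context Awareness: Compared with generic APIs, an MCP server describes each tool and resource with a name, a natural-language description, and an input schema. This helps agents grasp the semantics and relationships in the data, leading to more relevant and accurate responses.

- Scalable AI Integration: Enterprises can integrate AI across multiple systems without redesigning their architecture. Each system needs one MCP server, which any MCP-compatible AI application can then use, instead of a custom integration for every pairing.

- Enhanced Productivity: Routine tasks like data entry, status checks, or analysis can be automated, freeing up engineers and managers for higher-value work.

- Security-First Design: MCP provides building blocks for access control, such as OAuth-based authorization for remote servers and user consent before tool calls. These let AI agents be limited to the data and actions a user is entitled to, although each MCP server must still enforce the underlying system’s permissions.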
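MCP leaves the mapping from an authenticated user to PLM permissions to each server, so the check has to live in the server's tool handler. The sketch below shows one way such a handler might refuse out-of-scope requests, returning a tool result flagged with `isError` as MCP tool results allow. The role names and document classes are hypothetical. So is the assumption that the server has already resolved the caller's role from their credentials.

```python
# Hypothetical role-to-document-class permissions, mirrored from the PLM system.
ROLE_ACCESS = {"design_engineer": {"cad", "bom"}, "supplier_quality": {"bom"}}
DOCUMENTS = [
    {"id": "DOC-1", "cls": "cad"},
    {"id": "DOC-2", "cls": "bom"},
    {"id": "DOC-3", "cls": "cost"},
]

def text_result(text, is_error=False):
    """Shape a reply like an MCP tool result: a content list plus an isError flag."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}

def list_documents(user_role, doc_class):
    """Tool handler: enforce the caller's PLM permissions before returning data.
    `user_role` is assumed to be resolved by the server from the caller's credentials."""
    if doc_class not in ROLE_ACCESS.get(user_role, set()):  # unknown roles get nothing
        return text_result(f"role '{user_role}' may not read {doc_class} documents",
                           is_error=True)
    ids = [d["id"] for d in DOCUMENTS if d["cls"] == doc_class]
    return text_result(", ".join(ids))

print(list_documents("design_engineer", "cad"))
print(list_documents("supplier_quality", "cost"))
```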

⚠️ Cons

- Early-Stage Adoption: As an emerging standard, MCP's tooling and documentation may still be maturing, requiring early adopters to invest more in setup and integration.

- Complexity in Modeling Context: Properly defining roles, schemas, and intents for context sharing can be complex in large enterprises with diverse systems.

- Performance and Latency: Real-time interactions between AI agents and multiple backend systems can introduce latency unless optimized effectively.

- Governance Challenges: Enterprises will need to define policies around AI-generated actions and ensure traceability and auditability, especially in regulated industries.
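For the traceability and auditability point, one possible pattern is an append-only audit log of every tool call an agent makes. Each entry is hashed together with the previous entry's hash, so later edits to earlier entries are detectable. The sketch below uses only the Python standard library, and the entry fields are hypothetical. A production system would also record timestamps and store the log somewhere the agent cannot write to, since this check alone does not detect the newest entries being deleted.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log, actor, tool, arguments, outcome):
    """Record one AI-initiated action, chained to the previous entry's hash."""
    body = {"actor": actor, "tool": tool, "arguments": arguments,
            "outcome": outcome, "prev": log[-1]["hash"] if log else GENESIS}
    log.append({**body, "hash": _digest(body)})

def verify(log):
    """Return True if every entry still matches its hash and links to its predecessor."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

audit = []
append_entry(audit, "agent:change-assistant (for user jdoe)", "plm.create_eco",
             {"part": "BRKT-2"}, "created ECO draft")
append_entry(audit, "agent:change-assistant (for user jdoe)", "erp.availability",
             {"part": "BOLT-M6"}, "1200 on hand")
print(verify(audit))
audit[0]["outcome"] = "edited later"  # simulate tampering
print(verify(audit))
```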

Conclusion: Embracing the MCP Revolution

The Model Context Protocol represents a foundational shift in how AI systems (LLMs) connect with enterprise data — especially in complex environments like PLM.

Instead of treating LLMs as external observers or disconnected copilots, MCP enables them to become intelligent collaborators inside the data ecosystem, deeply aware of the tasks, roles, and relationships that matter in product development.

For product manufacturers aiming to stay competitive in an era of smart products and smart processes, adopting MCP could unlock a new level of agility, collaboration, and decision intelligence.

While there are challenges to navigate, the benefits — especially when it comes to automating knowledge work and enhancing cross-functional integration — are too significant to ignore.

The future of PLM is not just digital — it’s intelligent. And with MCP, that future is closer than ever.

MechiSpike can help your organization with consulting on anything and everything related to PLM, Engineering, and IT Digital. Click here to learn more about us.

For Corporates:

MechiSpike can help your organization improve its PLM ROI and achieve 30% savings, whether on hiring costs in staffing or on setting up an ODC.

We do this by efficiently planning, organizing, and controlling Product Master data, with seamless data exchange among Engineering, Manufacturing, and Enterprise systems.

Why MechiSpike:

Niche Expertise in Engineering & IT

Our Speed of Hiring, Cost Optimized Solutions and Global Presence.

RightSourcing is ‘Better Outsourcing’, given to ‘NICHE EXPERTS’.

Click here to learn how we can help you with our Proven Methodologies.

For PLM Careers:

Learn More | Earn More | Grow More

Interactive UI: Every application receives a response with a recruiter’s contact details. The applicant is then notified at each phase until they are placed with one of our 15+ global clients in India, the USA, and Germany.

Candidate Referral Program: Refer a candidate and earn INR 25,000.

Mechispike Solutions Pvt Ltd is a PLM-focused company with PLM projects of all kinds that enable employee career growth and add value to clients. We can position you better with our 15+ global clients in India, the USA, and Germany.

We believe in “Grow Together” and “Employee First” culture.

Dream more than a Job. Grow your PLM Career to the Fullest with MechiSpike

Click Here to explore our Job Openings.

Subscribe Now:

Our mission: To equip you with the knowledge and tools you need to drive value, streamline operations, and maximize return on investment from your PLM initiatives.

PLM ROI Newsletter will guide you through a comprehensive roadmap to help you unlock the full potential of your PLM investment.

We are committed to being your trusted source of knowledge and support throughout your PLM journey. Our team of experts and thought leaders will bring you actionable insights, best practices, case studies, and the latest trends in PLM.

Subscribe Now to get this weekly series delivered directly to your inbox as soon as we publish it.

To your PLM success!

Warm regards,